The Context Layer That Makes AI Agents Accurate

Problem

Why Do AI Agents Fail in the Enterprise?

AI agents powered by LLMs can add real value to critical decisions. In practice, however, most fail to deliver consistent, reliable outcomes because they don’t understand your business.

Without enterprise context such as business glossaries, entity definitions, relationships, rules, and policies, these agents hallucinate, misroute requests, and behave unpredictably.

Context Blindspots

Agents misread data because they don’t understand its business meaning: for example, what “customer,” “manager,” or “revenue” actually represent.

Inconsistent Definitions

When one agent counts active users over a 7-day window and another over a 30-day window, their answers disagree, and that inconsistency erodes trust.

Unscalable Prompts

Hard-coding the entire business context into every prompt doesn’t scale: prompts hit context-window limits, which leads to hallucinations.

No Enterprise Policy Awareness

Access-control rules have to be embedded separately in every agent, which leads to inconsistent governance.
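To make the inconsistent-definitions problem concrete, here is a minimal Python sketch of the alternative: define a metric such as “active users” once and have every agent reuse that shared definition. Everything here is hypothetical and for illustration only, including the names (`MetricDefinition`, `count_active_users`, and the two agent functions) and the 7-day window. It is not part of Trust3 IQ.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass(frozen=True)
class MetricDefinition:
    """A single, shared business definition (hypothetical structure)."""
    name: str
    window_days: int


# Assumption for illustration: "active" means seen within the last 7 days.
ACTIVE_USERS = MetricDefinition(name="active_users", window_days=7)


def count_active_users(last_seen: dict[str, date], as_of: date,
                       definition: MetricDefinition = ACTIVE_USERS) -> int:
    """Count users whose last activity falls inside the definition's window.

    A window of N days covers as_of and the N - 1 days before it.
    """
    cutoff = as_of - timedelta(days=definition.window_days)
    return sum(1 for seen in last_seen.values() if cutoff < seen <= as_of)


# Two hypothetical agents that reuse the same definition instead of
# each hard-coding its own 7-day or 30-day window.
def sales_agent_active_users(last_seen: dict[str, date], as_of: date) -> int:
    return count_active_users(last_seen, as_of)


def support_agent_active_users(last_seen: dict[str, date], as_of: date) -> int:
    return count_active_users(last_seen, as_of)


if __name__ == "__main__":
    last_seen = {
        "u1": date(2024, 5, 30),
        "u2": date(2024, 5, 10),
        "u3": date(2024, 5, 28),
    }
    as_of = date(2024, 6, 1)
    print(sales_agent_active_users(last_seen, as_of))    # 2
    print(support_agent_active_users(last_seen, as_of))  # 2
```

Because both agents call the same definition, changing the window in one place changes it for every agent, and they cannot drift apart.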

Enterprise

What Do Enterprises Need to Make AI Agents Work?

To move beyond pilots and deploy AI agents with real business impact, enterprises need more than just powerful models — they need context, governance, and control.

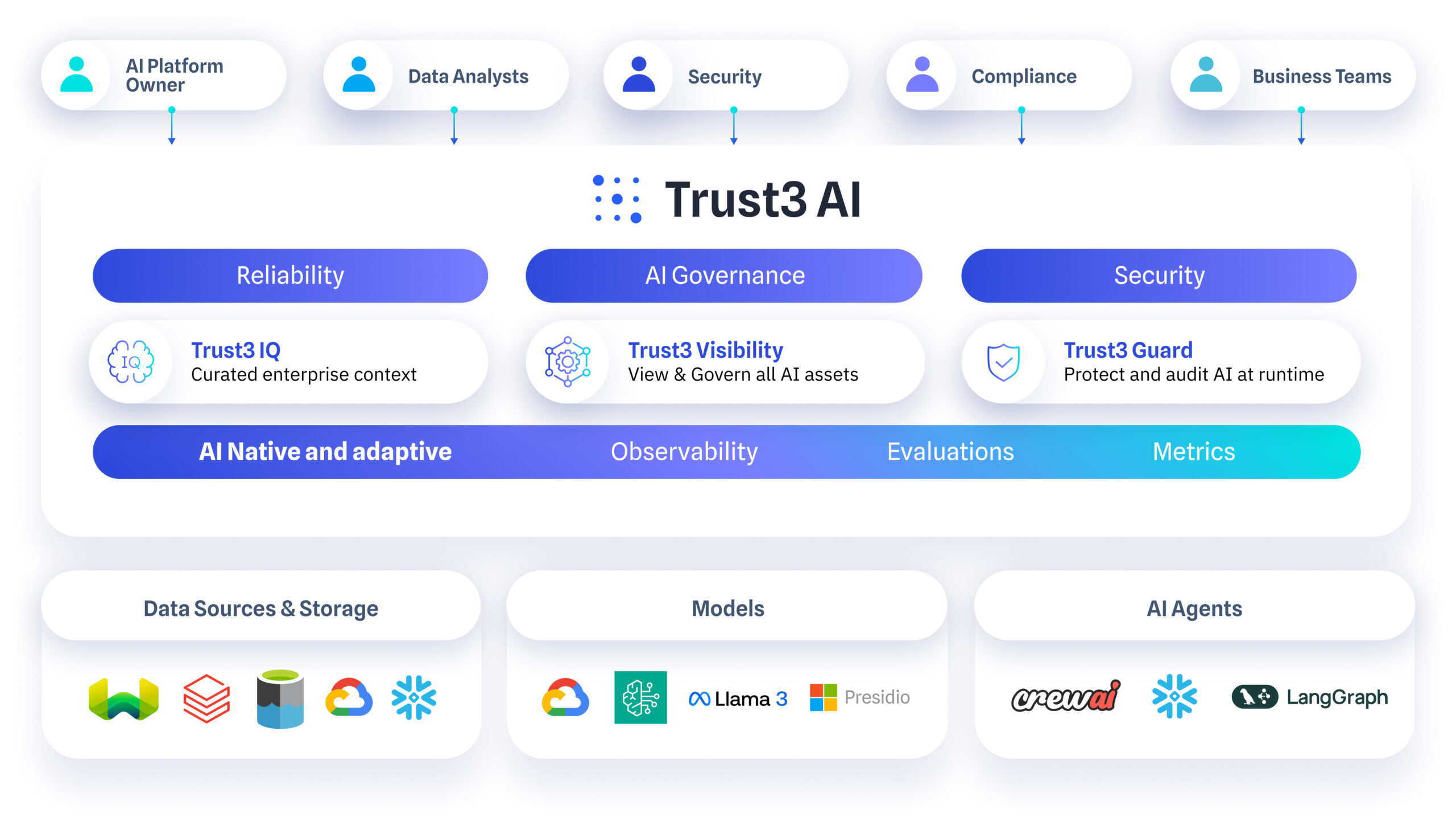

Trust3 IQ helps organizations build a solid foundation by delivering:

Reliability

Trust through structured semantics and scoped context — so agents produce consistent, accurate answers across systems.

Governance

Trust through clear definitions, lineage, and policy enforcement — so agents behave within enterprise boundaries.

Security

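Trust through purpose and access boundaries, so agents use only the context they are permitted to see.

AI agents need more than data: they need business context.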

Platform

The Enterprise Context Engine for AI Agents

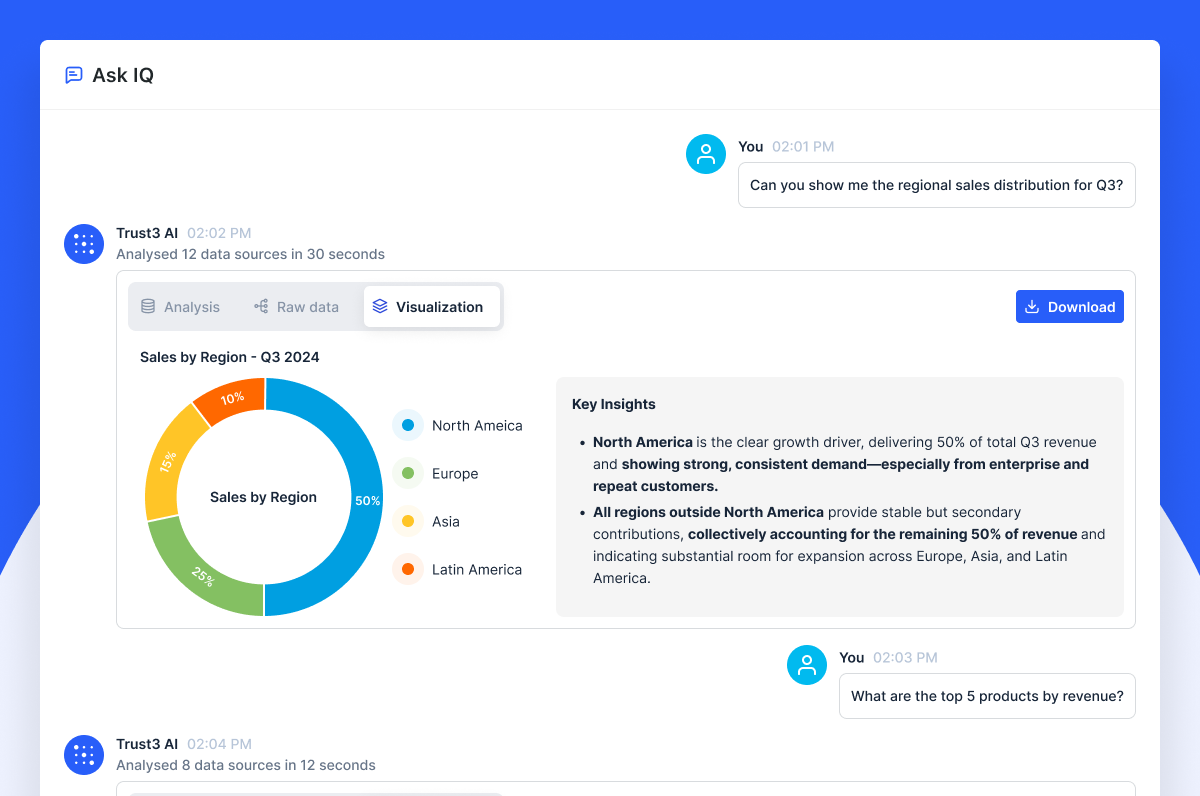

Trust3 IQ unifies structured and unstructured business data and delivers the right context to every agent at runtime.

Trust3 AI provides an enterprise context engine:

Trust3 IQ delivers a real-time, governed context layer for your agents.

It connects structured data, semantics, and business policies from systems like Snowflake, Databricks, and SAP, and feeds only the relevant, scoped context to each agent or copilot — just in time. No prompt stuffing. No policy gaps.

Agents gain the ability to:

- Reason with shared business meaning
- Operate within clear purpose and access boundaries
- Generate accurate, auditable, and compliant outputs
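The idea of feeding each agent only relevant, scoped context can be sketched in a few lines of Python. This is a hypothetical illustration built on simple assumptions. Each context item carries topic tags and a set of permitted roles, and an agent sees only the items that match both its purpose and its role. The names (`ContextItem`, `AgentProfile`, `scoped_context`, `build_prompt_context`) and the sample catalog entries are invented for this example and are not Trust3 IQ’s API. A production system would more likely use semantic retrieval and a dedicated policy engine than plain tag matching.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class ContextItem:
    """One piece of business context, e.g. a glossary entry or a policy."""
    key: str
    text: str
    topics: frozenset[str]
    allowed_roles: frozenset[str]


@dataclass(frozen=True)
class AgentProfile:
    """What an agent is for (its topics) and whose permissions it runs with."""
    name: str
    purpose_topics: frozenset[str]
    role: str


def scoped_context(agent: AgentProfile, catalog: list[ContextItem]) -> list[ContextItem]:
    """Keep items that match the agent's purpose AND are permitted for its role."""
    return [
        item
        for item in catalog
        if item.topics & agent.purpose_topics and agent.role in item.allowed_roles
    ]


def build_prompt_context(agent: AgentProfile, catalog: list[ContextItem]) -> str:
    """Render only the scoped items instead of stuffing the whole catalog into a prompt."""
    return "\n".join(f"{item.key}: {item.text}" for item in scoped_context(agent, catalog))


# Made-up sample entries for illustration only.
CATALOG = [
    ContextItem("revenue", "Recognized revenue, net of refunds.",
                frozenset({"finance"}), frozenset({"finance_analyst"})),
    ContextItem("customer", "An account with at least one paid order.",
                frozenset({"finance", "sales"}), frozenset({"finance_analyst", "sales_rep"})),
    ContextItem("salary_policy", "Salary data is restricted to HR.",
                frozenset({"hr"}), frozenset({"hr_admin"})),
]

if __name__ == "__main__":
    sales = AgentProfile("sales_copilot", frozenset({"sales"}), "sales_rep")
    print(build_prompt_context(sales, CATALOG))
    # customer: An account with at least one paid order.
```

In this sketch the sales agent never receives the revenue definition, which is outside its purpose, or the salary policy, which is outside both its purpose and its role. A finance agent running as `finance_analyst` would receive both `revenue` and `customer`.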

With Trust3, your agents behave like they know your enterprise — because now, they do.